Hate Speech on Social Media: Global Comparisons

- Hate speech online has been linked to a global increase in violence toward minorities, including mass shootings, lynchings, and ethnic cleansing.

- Policies used to curb hate speech risk limiting free speech and are inconsistently enforced.

- Countries such as the United States grant social media companies broad powers in managing their content and enforcing hate speech rules. Others, including Germany, can force companies to remove posts within certain time periods.

Introduction

A mounting number of attacks on immigrants and other minorities has raised new concerns about the connection between inflammatory speech online and violent acts, as well as the role of corporations and the state in policing speech. Analysts say trends in hate crimes around the world echo changes in the political climate, and that social media can magnify discord. At their most extreme, rumors and invective disseminated online have contributed to violence ranging from lynchings to ethnic cleansing.

The response has been uneven, and the task of deciding what to censor, and how, has largely fallen to the handful of corporations that control the platforms on which much of the world now communicates. But these companies are constrained by domestic laws. In liberal democracies, these laws can serve to defuse discrimination and head off violence against minorities. But such laws can also be used to suppress minorities and dissidents.

How widespread is the problem?

Incidents have been reported on nearly every continent. Much of the world now communicates on social media, with nearly a third of the world’s population active on Facebook alone. As more and more people have moved online, experts say, individuals inclined toward racism, misogyny, or homophobia have found niches that can reinforce their views and goad them to violence. Social media platforms also offer violent actors the opportunity to publicize their acts.

Social scientists and others have observed how social media posts, and other online speech, can inspire acts of violence:

- In Germany, a correlation was found between anti-refugee Facebook posts by the far-right Alternative for Germany party and attacks on refugees. Scholars Karsten Müller and Carlo Schwarz observed that upticks in attacks, such as arson and assault, followed spikes in hate-mongering posts.

- In the United States, perpetrators of recent white supremacist attacks have circulated among racist communities online, and also embraced social media to publicize their acts. Prosecutors said the Charleston church shooter, who killed nine black clergy and worshippers in June 2015, engaged in a “self-learning process” online that led him to believe that the goal of white supremacy required violent action.

- The 2018 Pittsburgh synagogue shooter was a participant in the social media network Gab, whose lax rules have attracted extremists banned by larger platforms. There, he espoused the conspiracy theory that Jews sought to bring immigrants into the United States and render whites a minority, before killing eleven worshippers at a refugee-themed Shabbat service. This “great replacement” trope, which was heard at the white supremacist rally in Charlottesville, Virginia, a year prior and originates with the French far right, expresses demographic anxieties about nonwhite immigration and birth rates.

- The great replacement trope was in turn espoused by the perpetrator of the 2019 New Zealand mosque shootings, who killed fifty-one Muslims at prayer and livestreamed the attack on Facebook.

- In Myanmar, military leaders and Buddhist nationalists used social media to slur and demonize the Rohingya Muslim minority ahead of and during a campaign of ethnic cleansing. Though Rohingya comprised perhaps 2 percent of the population, ethnonationalists claimed that Rohingya would soon supplant the Buddhist majority. The UN fact-finding mission said, “Facebook has been a useful instrument for those seeking to spread hate, in a context where, for most users, Facebook is the Internet.”

- In India, lynch mobs and other types of communal violence, in many cases originating with rumors on WhatsApp groups, have been on the rise since the Hindu-nationalist Bharatiya Janata Party (BJP) came to power in 2014.

- Sri Lanka has similarly seen vigilantism inspired by rumors spread online, targeting the Tamil Muslim minority. During a spate of violence in March 2018, the government blocked access to Facebook and WhatsApp, as well as the messaging app Viber, for a week, saying that Facebook had not been sufficiently responsive during the emergency.

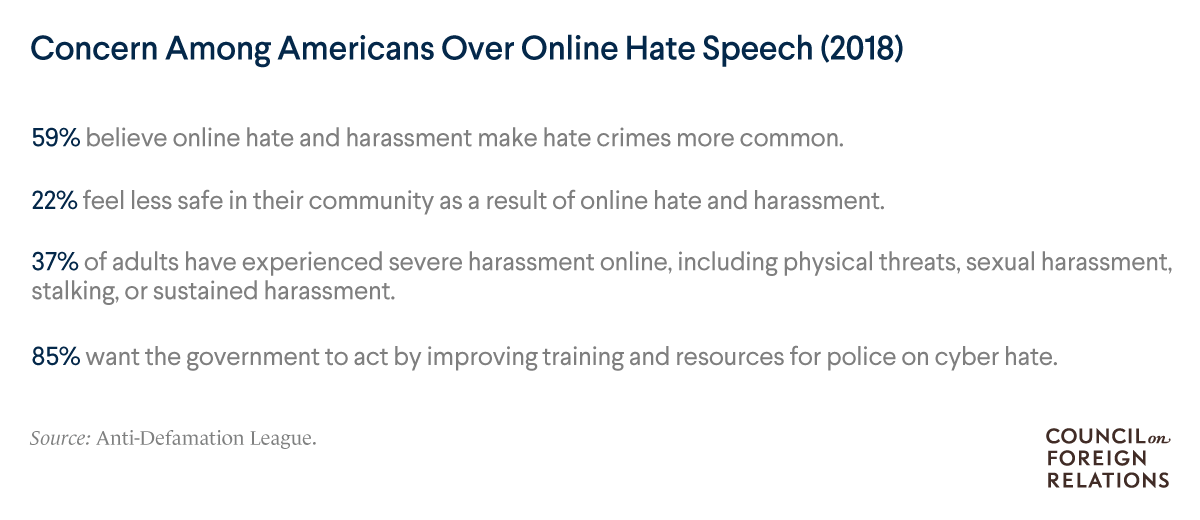

Does social media catalyze hate crimes?

The same technology that allows social media to galvanize democracy activists can be used by hate groups seeking to organize and recruit. It also allows fringe sites, including peddlers of conspiracies, to reach audiences far broader than their core readership. Online platforms’ business models depend on maximizing reading or viewing times. Since Facebook and similar platforms make their money by enabling advertisers to target audiences with extreme precision, it is in their interests to let people find the communities where they will spend the most time.

Users’ experiences online are mediated by algorithms designed to maximize their engagement, which often inadvertently promote extreme content. Some web watchdog groups say YouTube’s autoplay function, in which the player, at the end of one video, tees up a related one, can be especially pernicious. The algorithm drives people to videos that promote conspiracy theories or are otherwise “divisive, misleading or false,” according to a Wall Street Journal investigative report. “YouTube may be one of the most powerful radicalizing instruments of the 21st century,” writes sociologist Zeynep Tufekci.

YouTube said in June 2019 that changes to its recommendation algorithm made in January had halved views of videos deemed “borderline content” for spreading misinformation. At that time, the company also announced that it would remove neo-Nazi and white supremacist videos from its site. Yet the platform faced criticism that its efforts to curb hate speech do not go far enough. For instance, critics note that rather than removing videos that provoked homophobic harassment of a journalist, YouTube instead cut off the offending user from sharing in advertising revenue.

How do platforms enforce their rules?

Social media platforms rely on a combination of artificial intelligence, user reporting, and staff known as content moderators to enforce their rules regarding appropriate content. Moderators, however, are burdened by the sheer volume of content and the trauma that comes from sifting through disturbing posts, and social media companies don’t evenly devote resources across the many markets they serve.

A ProPublica investigation found that Facebook’s rules are opaque to users and inconsistently applied by its thousands of contractors charged with content moderation. (Facebook says there are fifteen thousand.) In many countries and disputed territories, such as the Palestinian territories, Kashmir, and Crimea, activists and journalists have found themselves censored, as Facebook has sought to maintain access to national markets or to insulate itself from legal liability. “The company’s hate-speech rules tend to favor elites and governments over grassroots activists and racial minorities,” ProPublica found.

Addressing the challenges of navigating varying legal systems and standards around the world—and facing investigations by several governments—Facebook CEO Mark Zuckerberg called for global regulations to establish baseline content, electoral integrity, privacy, and data standards.

Problems also arise when platforms’ artificial intelligence is poorly adapted to local languages and companies have invested little in staff fluent in them. This was particularly acute in Myanmar, where, Reuters reported, Facebook employed just two Burmese speakers as of early 2015. After a wave of anti-Muslim violence began in 2012, experts warned of the fertile environment ultranationalist Buddhist monks found on Facebook for disseminating hate speech to an audience newly connected to the internet after decades under a closed autocratic system.

Facebook admitted it had done too little after seven hundred thousand Rohingya were driven to Bangladesh and a UN human rights panel singled out the company in a report saying Myanmar’s security forces should be investigated for genocidal intent. In August 2018, Facebook banned military officials from the platform and pledged to increase the number of moderators fluent in the local language.

How do countries regulate hate speech online?

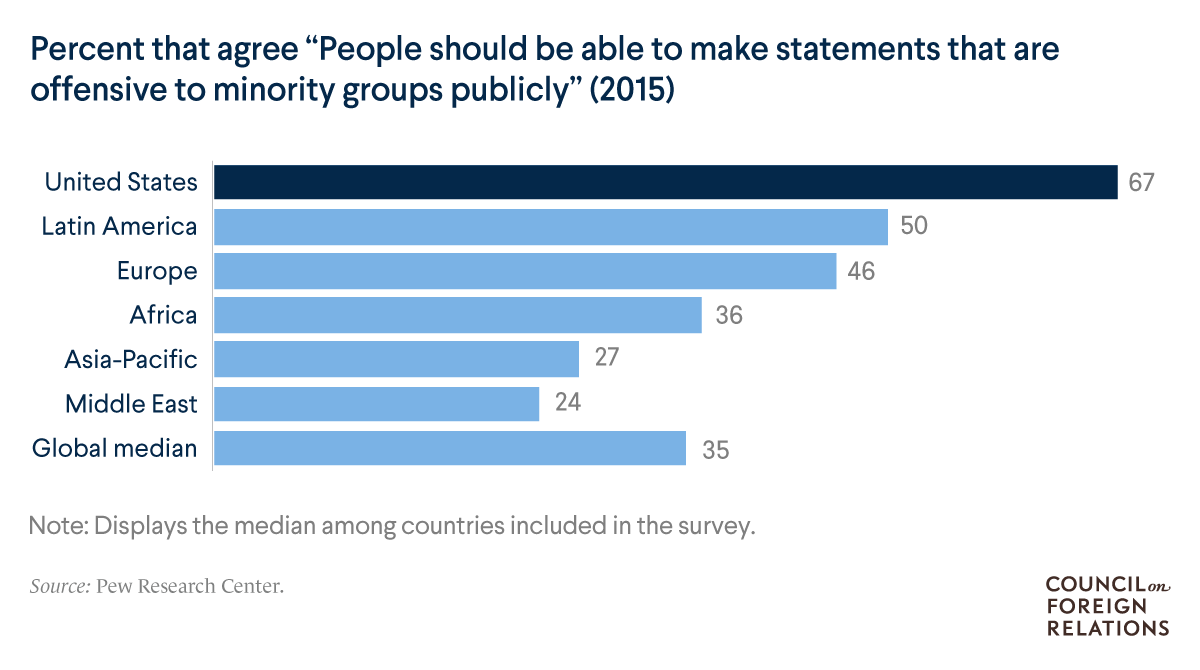

In many ways, the debates confronting courts, legislatures, and publics about how to reconcile the competing values of free expression and nondiscrimination have been around for a century or longer. Democracies have varied in their philosophical approaches to these questions, as rapidly changing communications technologies have raised technical challenges of monitoring and responding to incitement and dangerous disinformation.

United States. Social media platforms have broad latitude, each establishing its own standards for content and methods of enforcement. Their broad discretion stems from the Communications Decency Act. The 1996 law exempts tech platforms from liability for actionable speech by their users. Magazines and television networks, for example, can be sued for publishing defamatory information they know to be false; social media platforms cannot be found similarly liable for content they host.

Recent congressional hearings have highlighted the chasm between Democrats and Republicans on the issue. House Judiciary Committee Chairman Jerry Nadler convened a hearing in the aftermath of the New Zealand attack, saying the internet has aided white nationalism’s international proliferation. “The President’s rhetoric fans the flames with language that—whether intentional or not—may motivate and embolden white supremacist movements,” he said, a charge Republicans on the panel disputed. The Senate Judiciary Committee, led by Ted Cruz, held a nearly simultaneous hearing in which he alleged that major social media companies’ rules disproportionately censor conservative speech, threatening the platforms with federal regulation. Democrats on that panel said Republicans seek to weaken policies dealing with hate speech and disinformation that instead ought to be strengthened.

European Union. The bloc’s twenty-eight members all legislate the issue of hate speech on social media differently, but they adhere to some common principles. Unlike in the United States, it is not only speech that directly incites violence that comes under scrutiny; so too does speech that incites hatred or denies or minimizes genocide and crimes against humanity. Backlash against the millions of predominantly Muslim migrants and refugees who have arrived in Europe in recent years has made this a particularly salient issue, as has an uptick in anti-Semitic incidents in countries including France, Germany, and the United Kingdom.

In a bid to preempt bloc-wide legislation, major tech companies agreed to a code of conduct with the European Union in which they pledged to review posts flagged by users and take down those that violate EU standards within twenty-four hours. In a February 2019 review, the European Commission found that social media platforms were meeting this requirement in three-quarters of cases.

The Nazi legacy has made Germany especially sensitive to hate speech. A 2018 law requires large social media platforms to take down posts that are “manifestly illegal” under criteria set out in German law within twenty-four hours. Human Rights Watch raised concerns that the threat of hefty fines would encourage the social media platforms to be “overzealous censors.”

New regulations under consideration by the bloc’s executive arm would extend a model similar to Germany’s across the EU, with the intent of “preventing the dissemination of terrorist content online.” Civil libertarians have warned against the measure for its “vague and broad” definitions of prohibited content, as well as for making private corporations, rather than public authorities, the arbiters of censorship.

India. Under new social media rules, the government can order platforms to take down posts within twenty-four hours based on a wide range of offenses, as well as to obtain the identity of the user. As social media platforms have made efforts to stanch the sort of speech that has led to vigilante violence, lawmakers from the ruling BJP have accused them of censoring content in a politically discriminatory manner, disproportionately suspending right-wing accounts, and thus undermining Indian democracy. Critics of the BJP accuse it of deflecting blame from party elites to the platforms hosting them. As of April 2018, the New Delhi–based Association for Democratic Reforms had identified fifty-eight lawmakers facing hate speech cases, including twenty-seven from the ruling BJP. The opposition has expressed unease with potential government intrusions into privacy.

Japan. Hate speech has become a subject of legislation and jurisprudence in Japan in the past decade, as anti-racism activists have challenged ultranationalist agitation against ethnic Koreans. This attention to the issue attracted a rebuke from the UN Committee on the Elimination of Racial Discrimination in 2014 and inspired a national ban on hate speech in 2016, with the government adopting a model similar to Europe’s. Rather than specify criminal penalties, however, it delegates to municipal governments the responsibility “to eliminate unjust discriminatory words and deeds against People from Outside Japan.” A handful of recent cases concerning ethnic Koreans could pose a test: in one, the Osaka government ordered a website containing videos deemed hateful taken down, and in Kanagawa and Okinawa Prefectures courts have fined individuals convicted of defaming ethnic Koreans in anonymous online posts.

What are the prospects for international prosecution?

Cases of genocide and crimes against humanity could be the next frontier of social media jurisprudence, drawing on precedents set in Nuremberg and Rwanda. The Nuremberg trials in post-Nazi Germany convicted the publisher of the newspaper Der Stürmer; the 1948 Genocide Convention subsequently included “direct and public incitement to commit genocide” as a crime. At the UN International Criminal Tribunal for Rwanda, two media executives were convicted on those grounds. As prosecutors look ahead to potential genocide and war crimes tribunals for cases such as Myanmar, social media users with mass followings could be found similarly criminally liable.

Recommended Resources

Andrew Sellars sorts through attempts to define hate speech.

Columbia University compiles relevant case law from around the world.

The U.S. Holocaust Memorial Museum lays out the legal history of incitement to genocide.

Kate Klonick describes how private platforms have come to govern public speech.

Timothy McLaughlin chronicles Facebook’s role in atrocities against Rohingya in Myanmar.

Adrian Chen reports on the psychological toll of content moderation on contract workers.

Tarleton Gillespie discusses the politics of content moderation.
